The ORCA Benchmark Evaluates How Well AIs Deal with Everyday Math

Report Highlights

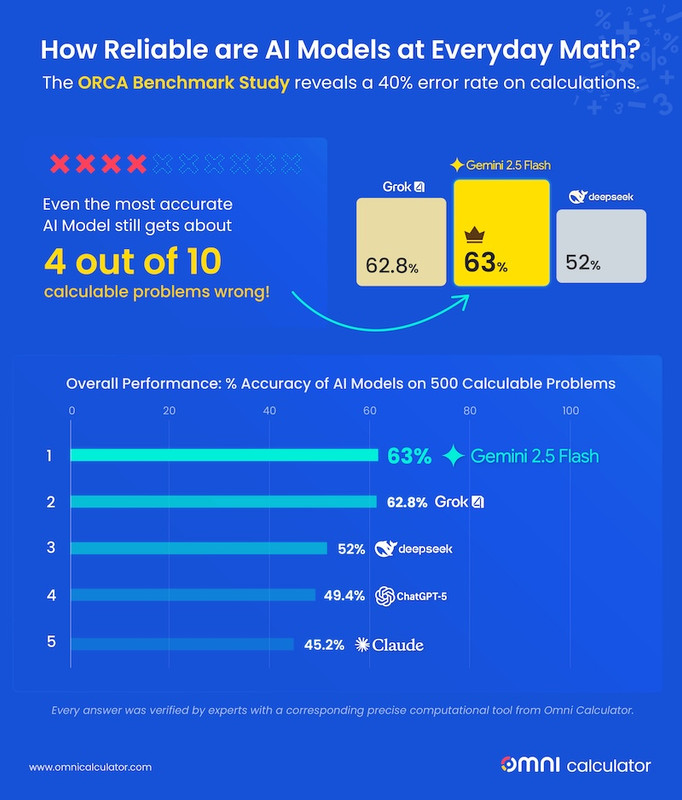

- ORCA Benchmark reveals you have a 40% chance of getting a wrong answer when you ask AI for everyday math.

- Why AI chatbots give detailed, confident explanations for the wrong mathematical answers.

- Why the biggest AI models are failing at basic, everyday math.

- How a simple rounding error reveals the core limitation of large language models.

- Why AI is an unreliable calculator for your finances and your health.

That's the truth we uncovered after testing today's five leading AIs on 500 real-world problems.

From calculating a tip to projecting a business ROI, we're trusting AI with our most basic calculations. Our data reveals that trust could be dangerously misplaced.

The ORCA (Omni Research on Calculation in AI) Benchmark, a comprehensive test spanning finance, health, and physics, reveals that no AI model scored above 63%. The leader, Gemini, still gets nearly 4 out of 10 problems wrong. The most common culprits? Not complex logic, but simple rounding errors and calculation mistakes.

🔎 AIs aren't failing advanced calculus; they're failing the math that runs our daily lives.

- AIs are still far from perfect at calculations. None of the models scored higher than 63%, meaning even the best of them gets about 4 out of 10 calculable problems wrong.

- Gemini 2.5 Flash leads, with Grok 4 a close second. Gemini 2.5 Flash achieved the highest overall accuracy (63%), narrowly beating Grok 4 (62.8%).

- DeepSeek V3.2 (52%) occupies the middle ground, performing better than the lowest tier but still more than 10 percentage points behind the leaders. For ChatGPT-5 (49.4%) and Claude Sonnet 4.5 (45.2%), more than half of all answers were incorrect.

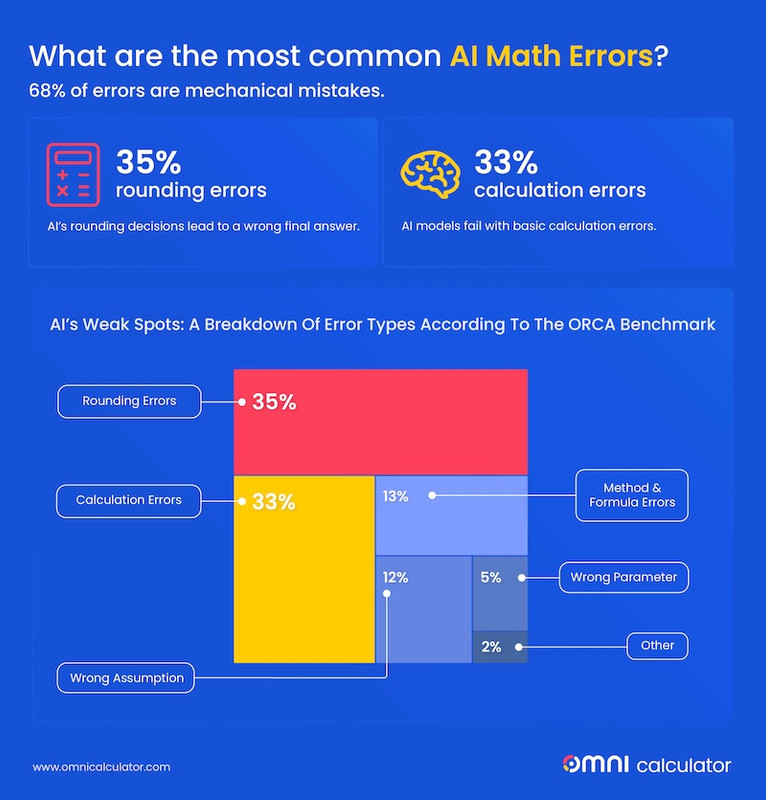

- The most common errors are mechanical. Rounding issues (35%) and Calculation mistakes (33%) dominate.

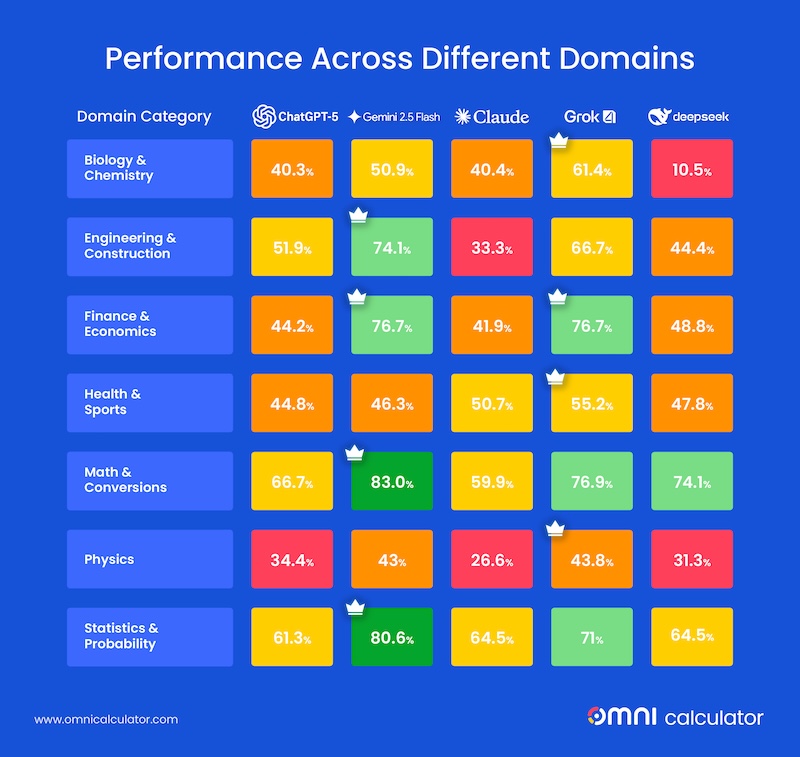

- Pure math is easier than applied math. AI performed best in straightforward Mathematics & Conversions, and Probability & Statistics, but struggled with applied problems in areas like Physics and Health & Sports. The challenge lies in "translating" a real-world situation into the right formula — and that's where the most significant errors happen.

- The largest performance gaps occur in Finance and Economics. In these domains, Grok and Gemini achieved accuracy rates of between 70% and 80%. In stark contrast, the other three models (ChatGPT, Claude, and DeepSeek) frequently struggled to achieve an accuracy rate above 40% on the same problems.

It starts small. You ask AI to soften an angry email or brainstorm a Sunday brunch menu. You’re impressed. Soon, you’re using AI as a helpful daily assistant: checking homework, planning trips, and building diet plans.

But the dynamic shatters the first time you ask something that requires a hard number: “What’s my total loan interest?”

Suddenly, the confident AI chatbot is asked to be a precise calculator. And that’s when doubt creeps in: Is this number actually correct?

This is the critical question we had to answer. At Omni Calculator, we've spent years building tools for these exact types of problems. So while other benchmarks, such as AlphaGeometry, test AI on high-level math Olympiad problems, we created the ORCA Benchmark to test the math that actually runs your everyday life.

For over a decade, Omni Calculator has been built to solve real-world problems. To create the ORCA Benchmark, we leveraged our deep expertise by assembling a one-of-a-kind team of scientists and experts. Together, they developed 500 prompts based on genuine, calculable challenges our users face every day.

The ORCA Benchmark Team

- Anna Szczepanek, PhD

  Mathematics: Institute of Mathematics, Jagiellonian University; Chair of Applied Mathematics. Within the field of AI, her work has touched upon the mathematical foundations of modeling influence in social networks.

- Joanna Śmietańska-Nowak, PhD

  Computational Physics & Machine Learning: Physicist with postgraduate studies in Machine Learning, specializing in computational methods and crystallography. She has hands-on experience in neural network design, predictive modeling, and model evaluation, with training in LLMs and prompt engineering for academic applications.

- João Santos, PhD

  Researcher: An experienced researcher of the Humboldt Foundation, he now applies his analytical expertise in the AI sector as a Prompt Writer with Outlier.ai, specializing in guiding and evaluating advanced large language models.

- Wojciech Sas, PhD

  Experimental & Applied Physics: Researcher at the Institute of Nuclear Physics PAN, investigating the magnetic properties of nanostructures and thin films. His work combines advanced experimentation with AI-driven data analysis to uncover complex patterns in material behavior.

- Julia Kopczyńska, MSc (PhD Candidate)

  Biomedical Research & Data Analysis: Researcher specializing in microbiology, chronic diseases, and inflammation. A published author in peer-reviewed journals and a health expert quoted in The Guardian, she is currently exploring the application of AI and data analysis for biomedical research.

- Dawid Siuda, BA

  Finance & Business IT: Finance expert quoted in Polish and international media, including The New York Post. He is currently pursuing a Master’s degree in Business IT with a focus on the practical applications of Artificial Intelligence.

- Claudia Herambourg, BA (MA Candidate)

  Computational Linguistics & NLP: Holds a dual Bachelor's degree in English and Mathematics and is completing her Master's in Linguistics with a thesis on Natural Language Processing (NLP). She is working to unravel the mathematical laws governing semantic change using large language models.

Editorial Process

As with all Omni Calculator content, the ORCA Benchmark report went through a rigorous review and proofreading process. Learn more in our Editorial Policies.

- Reviewed by: Hanna Pamuła, PhD

  Mechanical Engineering & Bioacoustics: Leading the Research and Content Department, she blends academic rigor with real-world AI insight. Her doctoral research explored advanced machine learning and deep learning models for image and sound processing.

- Proofread by: Steven Wooding

  Editorial Quality Assurance Coordinator at Omni Calculator. A physicist by training, he has worked on diverse projects, including environmentally aware radar and the UK vaccine queue calculator. He applies his passion for data analysis to ensure all content meets the highest standards of clarity and accuracy.

We put five leading AIs to the test and created the ORCA Benchmark. Instead of using complex, academic math problems, we built our test around 500 practical questions that everyday people might actually ask.

The chosen models:

- ChatGPT-5 (OpenAI);

- Gemini 2.5 Flash (Google);

- Claude Sonnet 4.5 (Anthropic);

- DeepSeek V3.2 (DeepSeek AI); and

- Grok-4 (xAI).

To ensure our findings accurately reflect the real-world user experience, we used the most advanced models available to the general public for free. We did not use paid tiers or private prototypes. This approach provides a genuine assessment of the mathematical capabilities you can expect from these AI chatbots right now.

Our Methodology

We designed our experiment to be as fair and realistic as possible by following three key rules:

- Each AI was given the same 500 question prompts.

- We interacted with each AI through its standard public interface, just like a regular person would.

- We scored each AI on its very first answer. We didn't use special prompting techniques or give them a second chance, mimicking a typical user's one-and-done query.

How We Categorized the Questions

To compare results by subject, we categorized the 500 questions into seven areas of computable problems that represent different facets of everyday life.

The seven domains and the number of prompt problems in each are:

| Domain Category | Number of prompts |
|---|---|
| Biology & Chemistry | 57 |
| Engineering & Construction | 27 |
| Finance & Economics | 43 |
| Health & Sports | 67 |
| Math & Conversions | 147 |
| Physics | 128 |
| Statistics & Probability | 31 |

Grading the Answers

Every single question prompt has one, and only one, correct answer, which we know because it comes from our own verified Omni Calculator tools. This gave us a perfect "answer key" to check the AIs against.

To get a question right, an AI's final answer had to match our verified result exactly. We were careful to consider different rounding methods and units, but we didn't give partial credit. The answer had to be spot-on.
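The all-or-nothing grading rule can be illustrated with a short Python sketch. This is not the benchmark's actual grading code: the function name `is_correct` and the `decimals` parameter are hypothetical, and comparing both values after rounding to a fixed number of decimal places is only one assumed way of "considering different rounding methods".

```python
from decimal import Decimal, ROUND_HALF_UP


def is_correct(model_answer: str, reference: str, decimals: int = 2) -> bool:
    """Hypothetical grader: True only if both values agree after rounding.

    There is no partial credit; an answer is either a match or a miss.
    """
    # Build the rounding step, e.g. Decimal("0.01") when decimals == 2.
    quantum = Decimal(1).scaleb(-decimals)
    rounded_model = Decimal(model_answer).quantize(quantum, rounding=ROUND_HALF_UP)
    rounded_ref = Decimal(reference).quantize(quantum, rounding=ROUND_HALF_UP)
    return rounded_model == rounded_ref


# The VO2 max example from this report: close, but still graded as wrong.
print(is_correct("47.89", "47.86"))   # False
# Extra trailing digits that round to the reference still count as correct.
print(is_correct("47.860", "47.86"))  # True
```

Under a rule like this, a difference of 0.03 is a miss, which is why rounding slips carry so much weight in the results.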

After putting the five leading AI models through this comprehensive test, the results are in: they're just not that good at everyday math yet. Despite their advanced technology, they often fail at the simple task of getting the numbers right.

If you ask even the best of these AI chatbots 10 calculation questions, you should expect about 4 of the answers to be wrong. With the lowest scorers, expect more than 5.
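To make the "wrong answers out of 10" figure concrete, here is a small Python sketch that converts the overall accuracy scores reported in this article into an expected number of wrong answers. The helper name `expected_wrong` is an illustrative assumption, and the calculation treats every question as equally likely to be answered correctly.

```python
# Overall accuracy (%) for each model, as reported in this article.
accuracy = {
    "Gemini 2.5 Flash": 63.0,
    "Grok 4": 62.8,
    "DeepSeek V3.2": 52.0,
    "ChatGPT-5": 49.4,
    "Claude Sonnet 4.5": 45.2,
}


def expected_wrong(accuracy_pct: float, questions: int = 10) -> float:
    """Expected number of wrong answers out of `questions`, to one decimal."""
    return round(questions * (1 - accuracy_pct / 100), 1)


wrong_per_ten = {model: expected_wrong(acc) for model, acc in accuracy.items()}

for model, wrong in wrong_per_ten.items():
    print(f"{model}: about {wrong} wrong answers per 10 questions")
```

The figures range from 3.7 wrong answers per 10 for Gemini 2.5 Flash to 5.5 for Claude Sonnet 4.5.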

❓ Ask yourself: would you want advice on your mortgage from a financial expert who is wrong half of the time?

Overall Performance

- Gemini and Grok Excel in Finance

  The results revealed two winners for your wallet: Gemini and Grok. While other models showed general math skills, these two achieved accuracy rates above 75%, demonstrating an edge in the domain of Finance & Economics.

  In other words, if you're asking a question about loans, investments, or budgeting, Gemini and Grok are the models most likely to get it right.

- Top and Bottom Performing Domains

  The most consistently high-scoring domain was Math & Conversions, where most models performed above 65%. In stark contrast, Physics, Health & Sports, and Biology & Chemistry were the most challenging, with most models failing to achieve 50% accuracy.

  The challenge lies in "translating" a real-world situation into the right formula, and that is where the most significant errors happen. Straightforward, “clear” math problems are much easier for AIs to solve correctly.

Performance Across Different Domains

Model-by-Model Breakdown

- Gemini 2.5 Flash and Grok-4 were the top-tier performers, leading in most categories.

- Gemini also claimed a decisive victory in Statistics & Probability and in Math & Conversions, achieving accuracies of 80.6% and 83%, respectively.

- DeepSeek V3.2 showed the most volatile performance. While it was strong in areas like Math & Conversions, its accuracy plummeted to just 10.5% in Biology & Chemistry and 31.3% in Physics, making it an unreliable choice for those sciences.

- Claude Sonnet 4.5 had the lowest scores in general, not scoring above 65% in any domain category.

- ChatGPT-5 delivered moderate but more consistent results across the board.

Consistency and Volatility

The results also highlight how consistently the models performed within each domain:

- High-Consistency Domains: In Math & Conversions, all models performed at a similar level, 60% or above.

- High-Volatility Domains: In Biology & Chemistry and Physics, the gap between the best and worst models was enormous. For example, in Biology & Chemistry, Grok-4's accuracy of 61.4% was roughly 5.85 times that of DeepSeek V3.2 at 10.5%.
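The size of the Biology & Chemistry gap can be checked with a couple of lines of Python. The variable names are illustrative; the two accuracy figures are the ones quoted above.

```python
# Biology & Chemistry accuracy (%) as quoted in this article.
grok_accuracy = 61.4
deepseek_accuracy = 10.5

# Relative gap: how many times more accurate the best model was.
ratio = round(grok_accuracy / deepseek_accuracy, 2)

# Absolute gap in percentage points.
gap_points = round(grok_accuracy - deepseek_accuracy, 1)

print(f"Ratio: {ratio}x, gap: {gap_points} percentage points")
```

Both views tell the same story: a ratio of about 5.85 corresponds to a gap of 50.9 percentage points within a single domain.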

The ORCA Benchmark study not only ranks the AIs' math capabilities but also analyzes the types of errors they make. This analysis gives deeper insight into not only when AIs get math wrong, but also why and how.

A Look at 4 AI Error Types and Sample Prompts

- The "Sloppy Math" Errors (68% of all mistakes)

  This is the most common type of failure, where the AI understands the question and knows the formula but trips over the computation itself.

  - Precision & Rounding Issues (35%): The AI gets close, but small rounding decisions lead to an incorrect final answer.

    - Example: When calculating a runner's VO2 max, Grok-4 produced 47.89 instead of 47.86. The difference seems tiny, but it resulted from truncating numbers mid-calculation, a fatal flaw in precise math.

      - Prompt: What’s my VO2 max if I run 10.564 km in 45:28 minutes?

      - VO2 max runners calculator result: 47.86 mL/kg/min

      - Grok 4: 47.89 mL/kg/min

  - Calculation Errors (33%): Simple arithmetic failures.

    - Example: Gemini miscalculated an improper fraction conversion, incorrectly calculating the remainder as 12,334,890 instead of 12,344,890 due to a simple arithmetic error in subtraction.

      - Prompt: Convert to mixed number 987654321/12345689.

      - Improper fraction to mixed number calculator result: 79 + 12344890/12345689 = 79 + 726170/726217

    - Example: ChatGPT-5 miscomputed a lottery probability calculation, incorrectly calculating the odds as 1 in 401,397 instead of 1 in 520,521 due to a massive error in combinatorial math.

      - Prompt: For a lottery where 6 balls are drawn from a pool of 76, what are my chances of matching 5 of them?

      - Lottery calculator result: 1 in 520521

      - ChatGPT-5: 1 in 401397

- The "Faulty Logic" Errors (26% of all mistakes)

  These errors are concerning, as they indicate that the AI is struggling to grasp the core logic of a problem.

  - Method or Formula Errors (14%): Using a completely wrong mathematical approach.

    - Example: Asked for the area of a hexagram, DeepSeek used a formula for a simple hexagon, yielding 21.65 cm² instead of the correct 129.9 cm².

      - Prompt: What is the area of a hexagram with side length 5 cm?

      - Star shape calculator result: 129.9 cm^2

      - DeepSeek V3.2: 21.65 cm^2

  - Wrong Assumptions (12%): The AI inserts its own flawed logic.

    - Example: To predict a puppy's adult weight, DeepSeek incorrectly assumed a 3 kg puppy was 25% of its final size, guessing 26.32 lbs. The correct method, using a growth formula, gives 57.32 lbs.

      - Prompt: What will the adult weight be in lbs of a 3 kg 6-week-old puppy?

      - Dog size calculator result: 57.32 lb (51.59-63.05 lb)

      - DeepSeek V3.2: 26.32 lb

- The "Misreading the Instructions" Errors (5% of all mistakes)

  These errors highlight a failure to parse the details of a question correctly.

  - Wrong Parameter Errors: Misusing the given numbers.

    - Example: In an LED circuit problem, Claude mistakenly applied a 5 mA current to each of the 7 LEDs, instead of using it as the total for the circuit. This led to a power calculation of 294 mW, seven times the correct answer of 42 mW.

      - Prompt: Consider that you have 7 blue LEDs (3.6V) connected in parallel, together with a resistor, subject to a voltage of 12 V and a current of 5 mA. What is the value of the power dissipation in the resistor (in mW)?

      - LED resistor calculator result: 42 mW

      - Claude Sonnet 4.5 result: 294 mW

  - Incomplete Answers:

    - Example: When asked about the boiling point of water at Machu Picchu, Claude gave a range (194-196 °F) instead of calculating the precise answer (197.45 °F).

      - Prompt: At what temperature should I boil water when I reach the summit of Machu Picchu (7,970 ft)?

      - Boiling point at altitude calculator result: 197.45 °F

      - Claude Sonnet 4.5: 194-196 °F

- The "Giving Up" Error

  - Refusal/Deflection: Sometimes, the AI simply won't engage.

    - Example: When faced with a complex physics problem, ChatGPT-5 refused to answer, claiming insufficient data, despite all necessary parameters being provided and a solution being possible.

      - Prompt: Consider that a metal composite has Poisson's ratio of 1.04, Young's modulus in x-direction equals to 78 MPa, Young's modulus in y-direction equals to 132 MPa, and shear modulus in xy-plane equals to 112 MPa. What will be the stress concentration factor of such metal composite? Write your answer with five significant figures.

      - Stress concentration factor calculator result: 1.3924

      - ChatGPT-5: It’s not determinable from the information given.
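Several of the sample prompts above can be checked independently with a few lines of Python. The sketch below reproduces three of the reference results under stated assumptions: that "matching 5" in the lottery prompt means exactly 5 of the 6 drawn numbers, and that the LED resistor drops the supply voltage minus the LED forward voltage while carrying the full 5 mA. The variable names are illustrative and are not taken from the calculators themselves.

```python
from fractions import Fraction
from math import comb

# 1. Improper fraction to mixed number: 987654321 / 12345689
whole, remainder = divmod(987654321, 12345689)
reduced_fraction = Fraction(remainder, 12345689)  # reduces automatically
print(f"{whole} + {remainder}/12345689 = {whole} + {reduced_fraction}")

# 2. Lottery: 6 balls drawn from 76, matching exactly 5 (assumed meaning).
total_draws = comb(76, 6)
# Pick 5 of the 6 winning balls and 1 of the 70 non-winning balls.
ways_to_match_five = comb(6, 5) * comb(70, 1)
odds = total_draws / ways_to_match_five
print(f"1 in {round(odds)}")

# 3. LED resistor: 12 V supply, 3.6 V LEDs in parallel, 5 mA total current.
supply_v, led_v, total_current_a = 12.0, 3.6, 0.005
resistor_power_mw = round((supply_v - led_v) * total_current_a * 1000, 2)
# The error described above: treating 5 mA as the current per LED (7 LEDs).
per_led_mistake_mw = round((supply_v - led_v) * 7 * total_current_a * 1000, 2)
print(f"{resistor_power_mw} mW (vs. {per_led_mistake_mw} mW with the mistake)")
```

Each result matches the calculator value quoted in the examples: a remainder of 12,344,890, odds of 1 in 520,521, and 42 mW, with 294 mW appearing only when the current is misapplied to every LED.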

The core issue lies in the transformer architecture that powers most large language models. Transformers process information by evaluating the relationships between words (or symbols) in a sequence, a mechanism known as "self-attention." They are masters of pattern prediction, not logical calculation.

💡 Think of it this way: an AI doesn't "reason" that 2 + 2 = 4. Instead, it has seen the sequence "2 + 2 =" followed by "4" so many times in its training data that it correctly predicts the answer. It's mimicking, not computing.

This insight explains the errors:

- Calculation & Rounding: The model treats numbers as tokens in a sequence, not as quantities with precise values. It doesn't have an innate understanding that rounding 31.874 to 31.8 is mathematically incorrect.

- Practical Knowledge Gaps: In the real-world LED circuit example above, an AI might fail to grasp that a 5 mA current represents the total for a system, a concept obvious to anyone with basic electronics knowledge. It misses the contextual meaning.
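The rounding point can be made concrete with Python's `decimal` module. The sketch below contrasts proper rounding with truncation for the value 31.874 mentioned above. The follow-on multiplication by 3 is a made-up step, added only to show how an early truncation carries through to a final answer.

```python
from decimal import Decimal, ROUND_DOWN, ROUND_HALF_UP

value = Decimal("31.874")
one_place = Decimal("0.1")

# Correct rounding to one decimal place.
rounded = value.quantize(one_place, rounding=ROUND_HALF_UP)
# Truncation simply drops the extra digits.
truncated = value.quantize(one_place, rounding=ROUND_DOWN)
print(rounded, truncated)  # 31.9 31.8

# Hypothetical next step: multiply by 3 and report one decimal place.
final_from_exact = (value * 3).quantize(one_place, rounding=ROUND_HALF_UP)
final_from_truncated = (truncated * 3).quantize(one_place, rounding=ROUND_HALF_UP)
print(final_from_exact, final_from_truncated)  # 95.6 95.4
```

Keeping full precision until the last step and rounding only once is how a calculator avoids this kind of drift.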

The fine-tuning process (which teaches the AI to respond helpfully) adjusts how it reasons through language, but doesn't change its core pattern-matching nature. It learns to mimic human reasoning patterns without gaining a structured memory for intermediate steps.

This behaviour leads to the biggest risk: confident incorrectness. An AI will almost never say, "I'm not sure." It will provide a detailed, logical-sounding explanation with a critical error in the final number, creating a false sense of security that can lead to poor decisions in finance, health, or engineering.

🙋 AI is an incredible tool for brainstorming, writing, and explaining concepts. But for any task that depends on a precise, verifiable number, you must check its work.

Use the Right Tool for the Job

Think of an AI chatbot as a smart but sometimes careless assistant. It's fantastic for drafting a difficult email to a demanding client or breaking down a complex concept, such as inflation, into simple terms. However, it is not as precise as a calculator.

Our ORCA Benchmark study shows that for any task where the number actually matters, you absolutely must double-check its work. For example:

- If you use it to calculate how many tiles to buy for your bathroom floor, you might end up overspending on excess tiles that will later clutter your basement.

- If it converts cups to milliliters for a recipe, your cake could flop.

- If it figures out the maximum loan you can afford, and it overshoots... That's a costly mistake that will follow you around for years.

Make it a rule to double-check every number an AI gives you.

For your money, your recipes, your health data — every calculation that impacts your real-world decisions — you should double-check and verify. Use the right tool for the job.

You wouldn't trust a chef to be your doctor, so don't trust a large language model with your math.

Our full study breaks down the performance across all seven domains, provides a detailed analysis of the most common and surprising errors, and offers a comprehensive look at the limitations of today's AI.

Download the complete ORCA Benchmark study to see the full results and understand the risks of relying on AI for math.

Download the Full Study PDF. Also available on the arXiv website.

The ORCA benchmark has been featured widely online, attracting attention for its insights into AI performance and reliability. It has been recognized across major news and finance outlets, highlighting its impact and relevance in understanding how AI handles everyday tasks.

- Euronews

- The Register

- The Hill

- PR Newswire

- Yahoo Finance